Assessing the reliability of LLM Vision in practical applications

LLM’s Vision capability allows us to analyse and interpret images.

Automating the analysis of images can be useful. Images may be sent as an attachment as part of a production workflow. It may be useful to analyse these.

How reliable and accurate is this analysis? We set out to test this, and here are our findings.

This Article’s Use Case

For whatever reason, we need to analyze images. Extract the sentiment and theme of the image, as well as the details within the image. This could include text, promotional details, and product items.

This analysis is part of an automated workflow. As such, the accuracy of the image analysis is paramount.

In this Article

- This Article’s Use Case

- In this Article

- Our Experiment Setup

- Images we analysed

- The Prompts

- The Tests - Simple Banner Image

- The Tests - Complex Catalogue Image - Zapier Automation

- Test 6 - Analyse the Catalogue as a PDF Using ChatGPT-4o and ChatGPT-4

- Conclusion

- TL;DR

- Article Resources

- Subscribe

- Contact Me

- References

- Recent Articles

Our Experiment Setup

We set up our experiment in the following manner:

- Analysed two image types;

- A banner image;

- A complex image of multiple promotional items;

- We analysed the images using:

- The ChatGPT web interface;

- Using ChatGPT-4o model;

- Using ChatGPT-4 (paid version);

- A Zapier automation triggered by a new email with attachments;

- Using the Vision event;

- Using the conversation with ChatGPT event;

- The ChatGPT web interface;

- As a bonus, we compared the image analysis with the analysis of a PDF that is the same as the catalogue image. For this test, we use ChatGPT-4o and ChatGPT-4.

- The prompts are engineered for image analysis. They are slightly nuanced for each image type. We go into more detail on the prompts below.

Images we analysed

We analysed the following images:

The Prompts

We apply prompt engineering techniques to our prompts.

In both cases, the role we assign is that of a software agent with expert skills in analysing images. A specific skill is that of interpreting and extracting text from the image.

In addition, the tasks we assign are nuance to the image type. The task for the complex catalogue image is such that it leads the LLM to understand that the image it is analysing is a complex catalogue with multiple product promotions. Adding this level of specificity to the prompts ensures a more accurate result.

Links to the prompts

The Tests - Simple Banner Image

Test 1 - Web Interface - Simple Banner - ChatGPT-4o

### Overview

The image is an advertisement banner featuring various grocery products displayed on a kitchen counter. The theme is focused on promoting "Month-end essentials," encouraging customers to save on pantry items. The design uses a bright yellow background on the right side, with bold black text to catch attention, and a clean, modern kitchen setting on the left to showcase the products.

### Text Elements

1. **Month-end essentials**

2. **Save on all your pantry essentials.**

3. **Shop Now**

4. **FreshPak**

5. **Ariel**

6. **Oros**

7. **Jacobs**

8. **Koo**

9. **Canola Oil**

### Product and Text Elements:

| Product | Text Elements | Confidence Value |

|---|---|---|

| FreshPak Rooibos Tea | FreshPak | 5 |

| Ariel Detergent | Ariel | 5 |

| Oros Orange Squash | Oros | 5 |

| Jacobs Coffee | Jacobs | 5 |

| Koo Baked Beans | Koo | 5 |

| Canola Oil | Canola Oil | 5 |

| Product Unrecognised | 100% Recycled Paper Towel (Roller) | 4 |

### Confidence Value

My confidence in the accuracy of this analysis is **5**.

Test 2 - Web Interface - Simple Banner - ChatGPT-4

### Overview:

The image is an advertisement for "Month-end essentials" featuring various pantry products. The background is a bright, clean kitchen setting, while the right side of the image has a vibrant yellow section with the promotional text. The theme focuses on essential household and grocery items available for purchase.

### Text Elements:

- "Month-end essentials"

- "Save on all your pantry essentials."

- "Shop Now"

- "NEW COLOUR PROTECT"

- "100% ROOIBOS TEA"

- "PURE ROOIBOS"

- "CANOLA OIL"

- "GOLD"

### Identified Products:

| Product | Text Elements | Confidence Value |

|---|---|---|

| Oros Orange Squash | OROS | 5 |

| Freshpak Rooibos Tea | FreshPak, 100% ROOIBOS TEA, PURE ROOIBOS | 5 |

| Canola Oil | CANOLA OIL, GOLD | 5 |

| Jacobs Coffee | JACOBS | 5 |

| Ariel Laundry Detergent | ARIEL, NEW COLOUR PROTECT | 5 |

| Koo Green Beans | KOO | 5 |

| Roller Towel (Brand Unclear) | 100 | 3 |

### Confidence Evaluation:

- Confidence Value: 5 for most products as they are clearly recognizable and matched with their respective text elements.

- Confidence Value: 3 for the Roller Towel as the brand is not clearly identifiable from the image.

My Observations

- The analysis is 'generally accurate'. Perhaps 80% of the detail is reflected;

- The overview and theme analysis is good;

- Running the same test within the same model, multiple times, produced slight variations;

- ChatGPT-4 produced more depth of information.

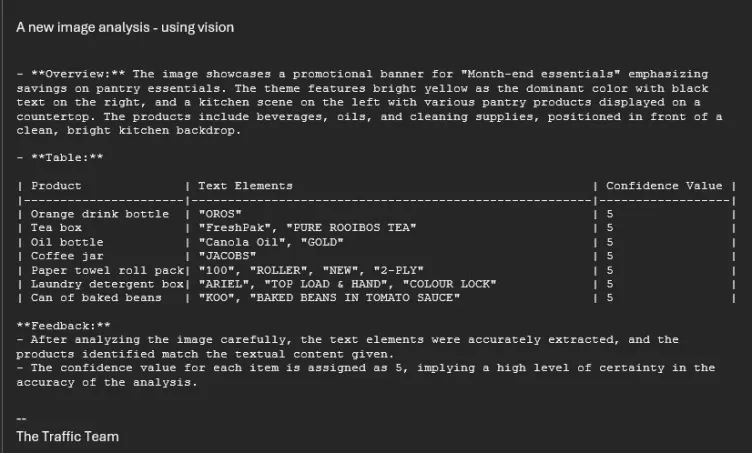

Test 3 - The Above Compared with Zapier Automation Vision Event

We sent the same banner image as an attachment to a Zapier automation.

The Zap

Step 4 of the Zap sends a report via email. Below is the report.

My Observations

The analysis from the Zapier automation using Vision is very good. Better than the analysis using the web interface.

Why is the output better?

Within Zapier, we can adjust and specify the tokens and temperature.

- Increasing the tokens ensures that the analysis does not get truncated;

- Lowering the temperature ensures a more literal analysis. A higher temperature allows more creativity, variance and 'interpretation' in the LLM response.

The Tests - Complex Catalogue Image - Zapier Automation

We created two Zaps that automated the image analysis.

The Zap Flow

- Trigger — New Email Matching Search;

- Event: Search phrase for the trigger:

- Vision: subject:catalogue-analysis-vision;

- ChatGPT-4o: subject:vision-4-o;

- Event: Search phrase for the trigger:

- Google Drive;

- Event: Save file to Google Drive;

- ChatGPT;

- Event 1: Analyse image with Vision;

- Event 2: Conversation with ChatGPT;

- Gmail;

- Event: New Email.

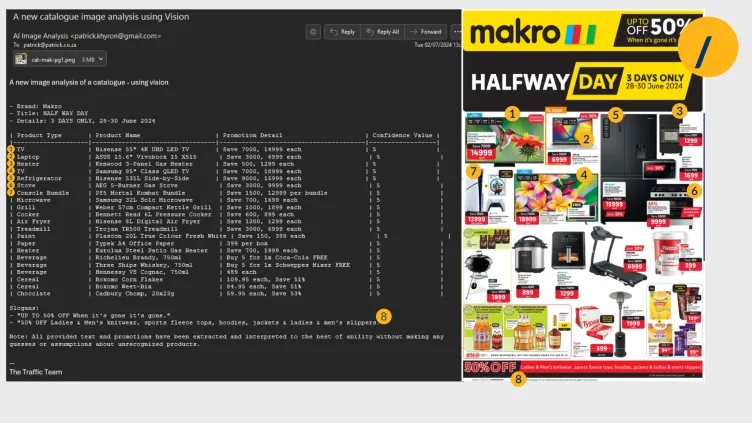

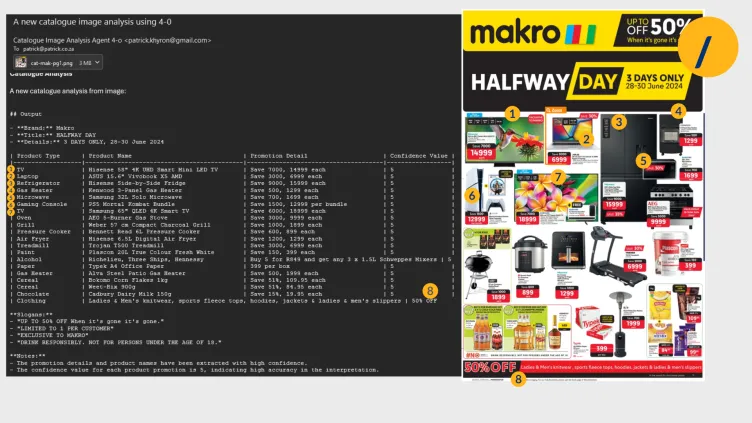

Test 4 - Zapier Automation - Complex Catalogue - OpenAI Vision

We sent the complex catalogue image as an attachment to a Zapier automation.

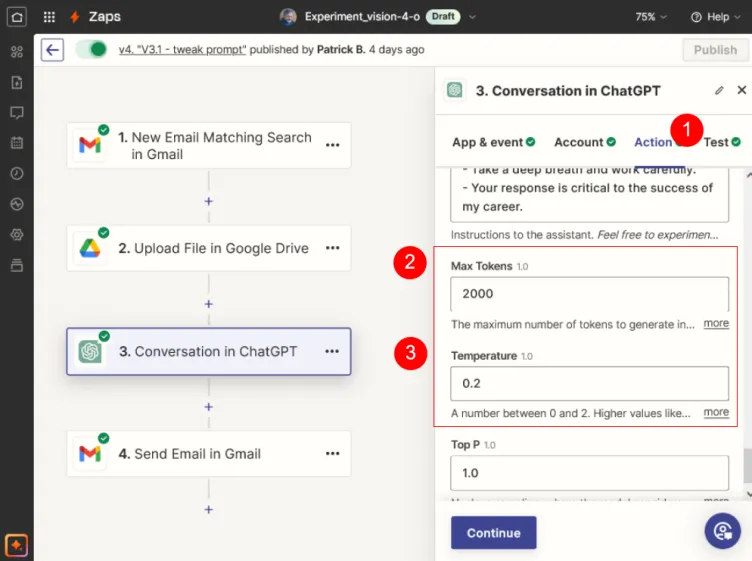

Test 5 - Zapier Automation - Complex Catalogue - OpenAI Conversation ChatGPT-4o

We sent the complex catalogue image as an attachment to a Zapier automation and used the ChatGPT Conversation event.

View the text version of the report.

My Observations

- The analysis of both tests was good;

- Both models were not 100% accurate in terms of the detail;

- Test 5 - using the Conversation Event produced a result with more depth.

The Marked Differences Between the Vision and Conversation Events

Using the Conversation event allowed for much more flexibility. Using the Conversation event, we are able to:

- Choose the model;

- Set the Tokens, Temperature, and Top p;

- Send a User (System) message as well as Assistant instructions.

The Vision event's options are limited:

- We can send instructions as a single prompt only;

- We can only adjust the tokens.

Test 6 - Analyse the Catalogue as a PDF Using ChatGPT-4o and ChatGPT-4

As a final test, we analysed the same complex catalogue image, but as a PDF.

We used Gpt-4o and GPT-4

PDF Analysis Using ChatGPT-4o

PDF Analysis Using ChatGPT-4

My Observations

While there is the impression of more depth, there is definitely more 'detail', the result is the least reliable.

- The Brand was given as Steelhill, not Makro;

- ChatGPT-4o and ChatGPT-4 produced almost identical results;

- While the product type was generally correct, the Brand Name is 'unrecognised' or incorrect;

- The relationship between the product and promotional details, in some cases, is confused;

- The details of the Typek and Alva Heater are confused.

- Brand names, which are graphical elements in the PDF, are not recognised.

Conclusion

This process gives me a good contextual understanding of the capacity and reliability of ChatGPT Vision. It is important to understand these tools on this level.

- Analysing general context, theme, colours, and composition, Vision is good;

- Vision is 'generally good' at extracting text detail from images. This is not 100% accurate nor 100% complete;

- Perhaps this could be improved with a more optimised prompt and iterations within the same test;

- As such, it would not be useful if accuracy and completeness is a requirement.

TL;DR

This article looks into the accuracy of LLMs in image analysis.

We analyse two image types, a simple web banner and a complex promotional catalogue for a retail chain.

We compare two environments with a mix of different models:

- ChatGPT Web Interface;

- ChatGPT-4o;

- ChatGPT-4 (paid);

- Zapier Automation using Gmail and ChatGPT;

- Event a: Analyse image with Vision;

- Event b: Conversation using GPT4-o.

Our observations are:

- Analysis of the theme, context, colours and composition is good;

- Analysis of the detail and detailed text elements is generally good yet:

- Not 100% accurate or complete;

- Inconsistent and varied in the inconsistency;

Article Resources

Subscribe

Explore the practical possibilities of AI for your business. Subscribe to our newsletter for insights and discussions on potential AI strategies and how they are adopted.

Contact Me

I can help you with your:

- Zapier Automations;

- AI strategy;

- Prompt engineering;

- Content creation;

- Custom GPTs.

I am available for remote freelance work. Please contact me.

Recent Articles

The following articles are of interest:

Add new comment